Compute model

The conceptual figures below illustrate the higher-level processing strategy, the compute model, and nested parallelism.

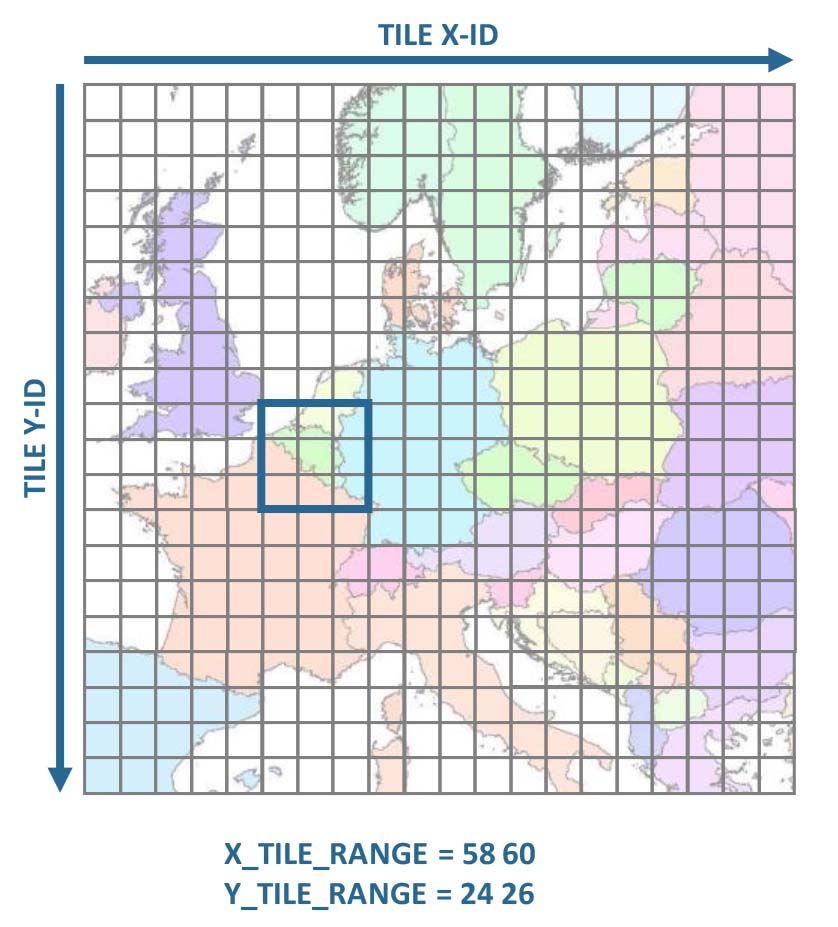

The cubed data are stored in a grid system. Each tile has a unique tile ID, which consists of an X-ID and a Y-ID. The numbers increase from left to right and from top to bottom. In a first step, a rectangular extent needs to be specified using the tile X- and Y-IDs. In this example, we have selected the extent covering Belgium, i.e. 9 tiles.
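As a minimal sketch of how a rectangular extent expands into individual tiles, the snippet below enumerates all tile IDs between a minimum and maximum X-ID and Y-ID. The function names, the label format, and the ID values are assumptions made for illustration; they are not the actual IDs of the tiles covering Belgium.

```python
def tiles_in_extent(x_min, x_max, y_min, y_max):
    """Return all (x, y) tile IDs in the inclusive rectangular extent, row by row."""
    return [
        (x, y)
        for y in range(y_min, y_max + 1)   # Y-IDs increase from top to bottom
        for x in range(x_min, x_max + 1)   # X-IDs increase from left to right
    ]


def tile_name(x, y):
    """Format a tile ID as a label (hypothetical format, for illustration only)."""
    return f"X{x:04d}_Y{y:04d}"


# Hypothetical 3 x 3 extent, i.e. 9 tiles
extent = tiles_in_extent(x_min=10, x_max=12, y_min=20, y_max=22)
print(len(extent))
print([tile_name(x, y) for x, y in extent])
```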


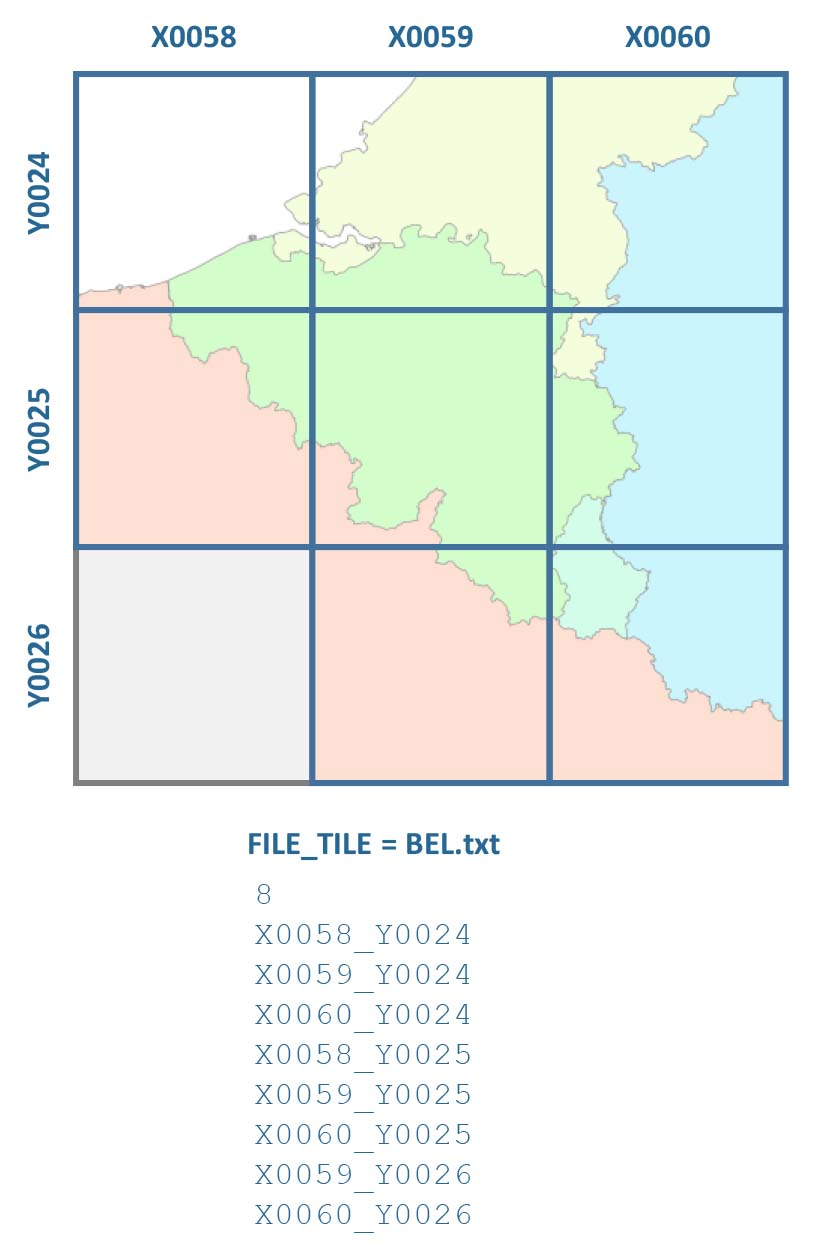

If you do not want to process all tiles, you can use a tile allow-list. The allow-list is intersected with the analysis extent, i.e. only tiles included in both the analysis extent AND the allow-list will be processed. This is optional.
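The intersection logic can be sketched as follows. This is an illustration under stated assumptions, not FORCE's implementation: `select_tiles` is a hypothetical helper, and the tile IDs are made up.

```python
def select_tiles(extent, allow_list=None):
    """Keep the tiles that are in the extent AND in the allow-list, if one is given."""
    if allow_list is None:          # the allow-list is optional
        return list(extent)
    allowed = set(allow_list)
    return [tile for tile in extent if tile in allowed]


# Hypothetical 3 x 3 analysis extent of (x, y) tile IDs
extent = [(x, y) for y in range(20, 23) for x in range(10, 13)]

# (99, 99) is on the allow-list but outside the extent, so it is not processed
allow_list = [(10, 20), (11, 21), (99, 99)]

selected = select_tiles(extent, allow_list)
print(selected)
```

Without an allow-list, `select_tiles(extent)` simply returns every tile in the extent.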


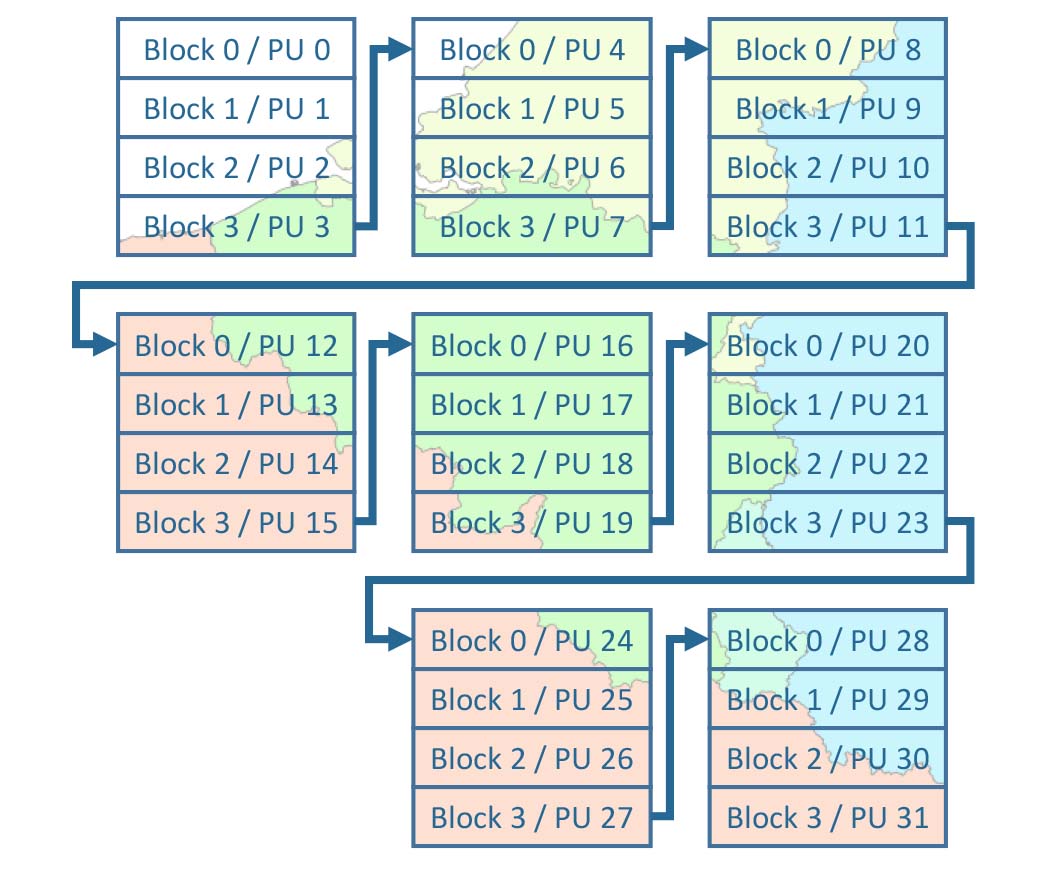

The image chips in each tile have an internal block structure for partial image access. These blocks are strips that are as wide as the


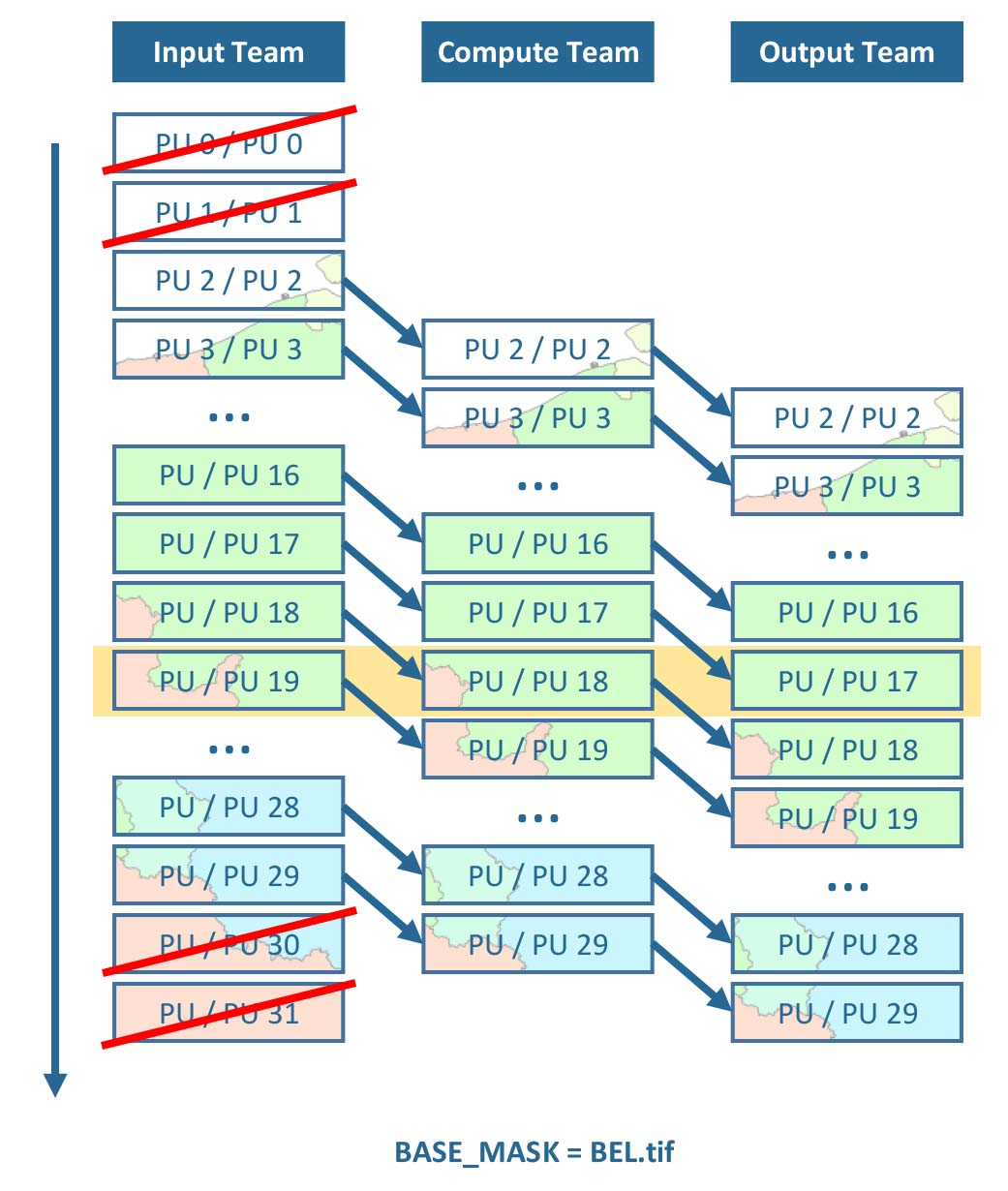

FORCE uses a streaming strategy, where three teams take care of reading, computing, and writing data. The teams work simultaneously: for example, input data for processing unit (PU) 19 is read, pre-loaded data for PU 18 is processed, and processed results for PU 17 are written, all at the same time. If processing takes longer than I/O, this streaming strategy avoids idle CPUs waiting for delivery of input data. Optionally, processing masks can be used to restrict processing and analysis to certain pixels of interest (in this case, the national territory of Belgium). Processing units that do not contain any active pixels are skipped.
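The streaming idea can be illustrated with a small three-stage pipeline. This is a conceptual sketch only, assuming one Python thread per team and bounded queues that hold a single pre-loaded unit; all names (`reader`, `computer`, `writer`, `run_stream`) and the string placeholders for data are hypothetical and do not reflect FORCE's internals.

```python
import queue
import threading

DONE = object()  # sentinel marking the end of the stream


def reader(units, is_active, to_compute):
    for pu in units:
        if not is_active(pu):                  # skip units without active mask pixels
            continue
        to_compute.put((pu, f"input-{pu}"))    # stand-in for reading from disk
    to_compute.put(DONE)


def computer(to_compute, to_write):
    while (item := to_compute.get()) is not DONE:
        pu, data = item
        to_write.put((pu, data.upper()))       # stand-in for the actual processing
    to_write.put(DONE)


def writer(to_write, written):
    while (item := to_write.get()) is not DONE:
        written.append(item)                   # stand-in for writing to disk


def run_stream(units, is_active):
    to_compute = queue.Queue(maxsize=1)        # holds one pre-loaded unit
    to_write = queue.Queue(maxsize=1)          # holds one finished unit
    written = []
    teams = [
        threading.Thread(target=reader, args=(units, is_active, to_compute)),
        threading.Thread(target=computer, args=(to_compute, to_write)),
        threading.Thread(target=writer, args=(to_write, written)),
    ]
    for team in teams:
        team.start()
    for team in teams:
        team.join()
    return written


# PU 16 is treated as fully masked out and is therefore skipped
results = run_stream(range(15, 20), is_active=lambda pu: pu != 16)
print(results)
```

Because each queue holds only one item, the reader can run at most a unit or two ahead of the computing stage, which mirrors the "read PU 19 while PU 18 is processed and PU 17 is written" pattern described above.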


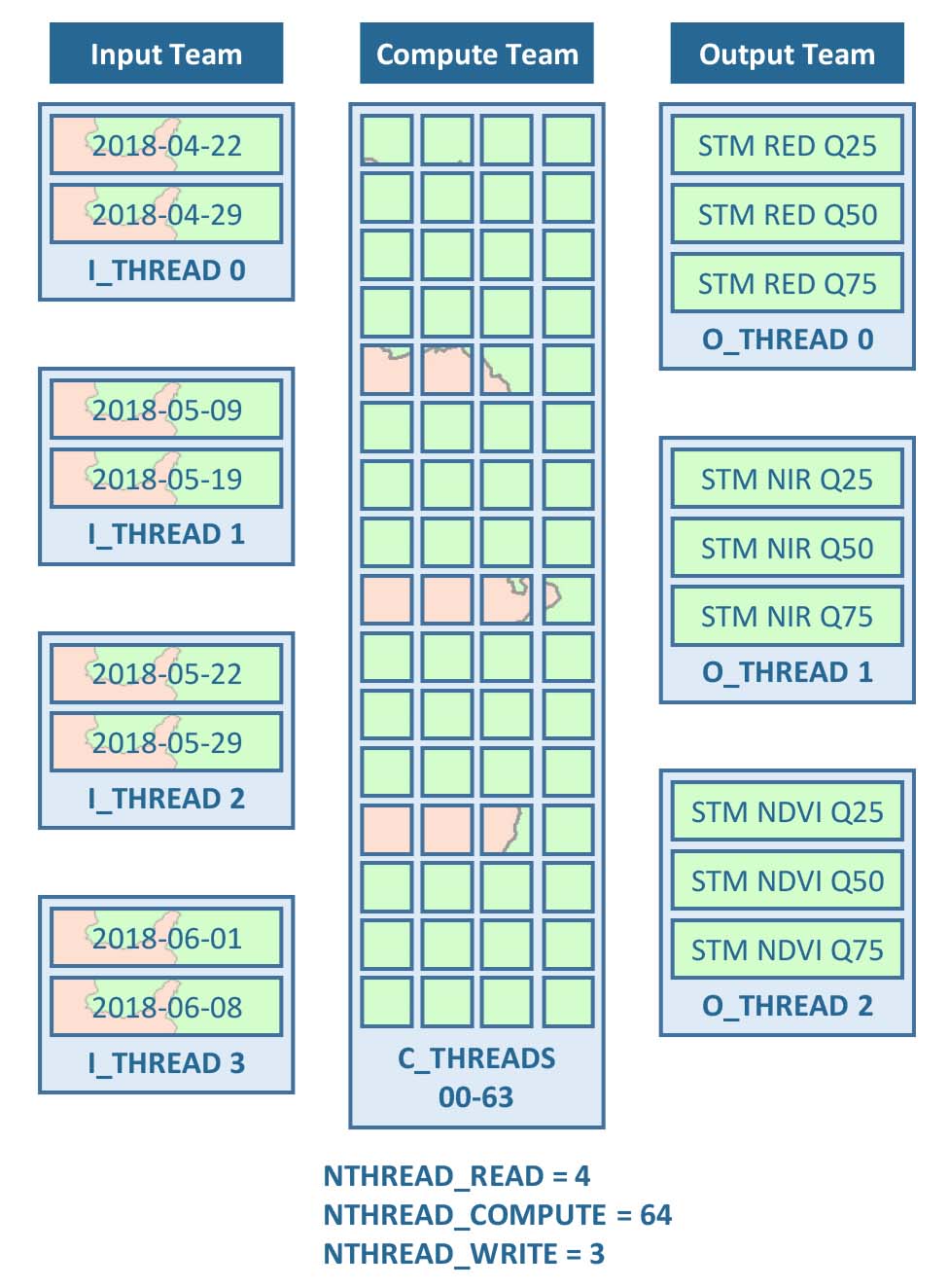

Each team can use several threads to further parallelize the work. In the input team, multiple threads read multiple input images simultaneously, e.g. different dates of ARD. In the computing team, the pixels are distributed to different threads (please note that the actual load distribution may differ from the idealized figure, for example due to load balancing). In the output team, multiple threads write multiple output products simultaneously, e.g. different Spectral Temporal Metrics.
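The nested level of parallelism can be sketched for a single processing unit as follows. The helper `run_team`, the stand-in functions, and the thread counts are assumptions made for illustration; Python threads are used here only to show the structure, not to model FORCE's actual threading or performance.

```python
from concurrent.futures import ThreadPoolExecutor


def run_team(task, items, n_threads):
    """Run task on every item with a team of n_threads; results keep the item order."""
    with ThreadPoolExecutor(max_workers=n_threads) as pool:
        return list(pool.map(task, items))


def read_image(date):
    month = int(date[5:7])
    return [month, month * 2, month * 3]   # stand-in for three pixel values


def process_pixel(series):
    return sum(series) / len(series)       # stand-in metric: temporal mean


def write_product(product):
    name, values = product
    return f"{name}: {len(values)} pixels" # stand-in for writing a file


dates = ["2020-01-15", "2020-02-15", "2020-03-15"]

# Input team: several threads read different dates at the same time
images = run_team(read_image, dates, n_threads=3)

# Computing team: the pixels (one time series each) are spread over threads
pixel_series = list(zip(*images))
means = run_team(process_pixel, pixel_series, n_threads=4)

# Output team: several threads write different products at the same time
products = [("mean", means), ("count", [len(s) for s in pixel_series])]
reports = run_team(write_product, products, n_threads=2)
print(means, reports)
```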
